EQLS is a digital space where people can speak to AI characters about anything they’d like. The characters help people learn about themselves and make life a little easier.

What is the problem?

EQLS responds to the problem of increasing rates of anxiety in young people, particularly anxiety caused by social media. For a young generation of people in the UK, social media is almost essential as a means to be connected and considered, particularly in the transition from education to more independent adult life. Many connections have been drawn between a rise in anxiety and the use of social media, largely attributing blame to the way it creates a culture where physical appearance and constant presence put everyone on a stage in front of their peers, all the time. For some, this is fine; for others, it can lead to a perception that appearances must be relentlessly kept up and that not meeting social norms and fashions could result in exclusion or bullying.

In this context, people may technically be digitally connected to each other but feel unable to share feelings and stresses with one another. While mental health issues are now talked about more freely than they used to be, there is still a stigma associated with anxiety, which may leave many people feeling unsupported and isolated. Beyond simply not sharing these issues with others, it’s possible that many people absorb that stigma to the point where they don’t even self-reflect because of its association with mental health.

How ‘EQLS’ responds

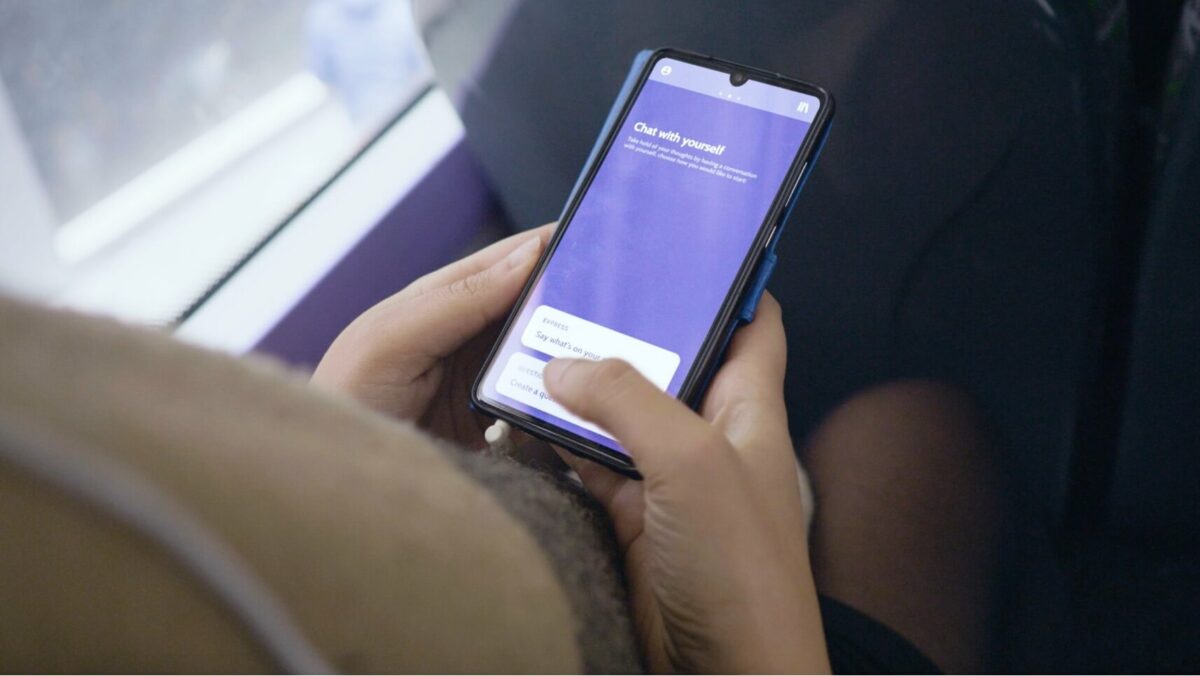

EQLS offers people a safe space to have whatever kinds of conversations they need without having to be concerned about social pressures. Although these AI characters are not real, people are able to process thoughts and find some calm and comfort through the act of conversation. EQLS gradually learns about the user, helps them learn about themselves and helps them make plans in order to build a self-reflection practice, which will help them overcome anxiety and build emotional resilience.

A range of AI characters to talk with:

EQLS is the name of the service as well as the term for the AI characters. Each ‘EQL’ has a different character so that each user can decide who they would like to talk to depending on what kind of mood they are in. Whatever their mood, there is a character that will offer what they need and listen to whatever the user has to say without any judgement.

Track your mood:

EQLS is connected to the user’s wearables and devices and is therefore able to detect when someone might be in distress or becoming unhappy. In that case, EQLS can intervene by suggesting that the user talk with a character about whatever is happening in their life, to help them reflect on, understand and process their feelings better, or simply to relax by talking about something else.

Learn about your patterns and make plans:

Over time, EQLS learns more about the user and enriches its knowledge through what the user talks about in the app. With this deeper knowledge, the service can begin to recognise patterns in someone’s mood connected to their behaviours, activities or social life, and can draw the person’s attention to them. Seeing these patterns may give people tangible examples of emotional influences in their life, or it may help them learn about new influences. For example, someone may feel anxious and unhappy after every party they go to. In this case, EQLS would point that out and help the user make plans about how to adapt.

Relax completely:

Alongside conversational reflection techniques and awareness-raising tools, some of the EQLS characters can also help the user to relax and unwind using mindfulness, meditation sessions or even sleep stories.

Chat with real people who have been through the same things as you:

Under particular circumstances, EQLS can put users in touch with one another so that they can share experiences. The match would be made when someone has had similar experiences to the other user in the past, but has come through that particular problem. In doing so, both parties are able to talk, reflect and support each other in a safe space, without judgement. Critically, they will be reminded that they can share things with real people as well as EQLS characters. This kind of matching would only be done where enough is known about a user and when the user is fully comfortable with the idea.

What we learnt

We demonstrated a low-fidelity prototype of EQLS to high-need users and this is what we learnt:

- We found that people generally had a strong sense that this could be of help to them because they recognised the value of conversation (even if it was with an artificial character) and because they saw great value in using more personal data to give them new insights into their patterns and behaviours.

- However, they all expressed a range of opinions about how the app is positioned in terms of who the agents are, how seriously the process should be portrayed and whether it was a space only for mental health support.

How serious can it be?

Some users responded to this service by saying that it was refreshing to see an app for people with mental health needs that wasn’t purely devoted to the moments of struggle. They described how a lot of existing apps on the market are purely for when you are down and are only about learning about, describing or analysing your issues. The problem with this is that these services only develop negative connotations and become a symbol of bad times and intense experiences. What was refreshing for them about EQLS is that it recognises people’s need to sometimes be more passive or less intense, either through sleep stories or through informal conversation that can be about whatever the user wants.

However, there was a division among users about how serious the service should feel. While some wanted to avoid too much intensity, others said that they would not take the process seriously if it was anything other than a devoted, emotionally therapeutic space. They felt that divulging intimate information required effort and investment, and for them to trust that this investment was worth it, they needed to respect the authority of the service. That respect was damaged by the presence of lighthearted characteristics in the agents or more casual activities like sleep stories. They needed more seriousness, more obligation and more expectation on themselves in order to engage.

This issue represents a sensitivity that must be considered in any service of this nature. The overall positioning of the service (not just of any interactive characters) must be framed in a balanced way. It somehow needs to invoke respect and authority in order to prompt significant investment from the user. At the same time, it cannot be too demanding and intense, because the service could become solely associated with negativity and hard work.

Embedded within this balancing act is an assumption that digital services must provide value more immediately, which strains interactions that typically require higher investment for higher intrinsic value gains. Theoretically, if a service produces value for someone, then they will come back. But what if the value a service produces is not immediately present, visible or understandable? Does this challenge demonstrate how an array of valid digital services may not yet be acceptable to the market? Do deeper benefits have to be smuggled in behind immediate gains? Are there preset, accepted levels of relationship that we can have with apps or with our phones?

Dependence vs Resilience

For some participants, the proposition of speaking with artificial agents seemed unhelpful, but for many it seemed like a powerful alternative for the moments when they needed support. They described how speaking to AI agents represented a safer and more constant support structure than the people in their lives, because they could find support at any moment without feeling like a burden on someone else or risking being judged or having their trust betrayed. Does this sentiment represent a lack of trust among their support network, an increased level of trust in technology or a growing willingness for people to engage in services that transparently leverage their own irrationalities for their own gain?

Some participants alluded to concerns about what this might mean over a longer period of time:

What would happen if people became used to or even dependent on artificial agents for emotional support?

How would people draw boundaries between artificial and real relationships? What would this mean for people’s human relationships?

Would people expect more or less of those around them or even just connect with them less?

If people only share their problems with AI agents, would everyone feel like they were the only ones struggling? Would this really help people feel supported?

Overall, while the AI agents seemed highly valuable to people, the reception was dampened by a sense that the service could easily escalate into a more harmful space where people’s other support networks, whether friends or therapists, might be impacted or replaced, ultimately diminishing people’s resilience. So, to what extent should these conversational agents be anthropomorphised? And what might a service like this do to balance the user’s dependence on the service with their reliance on themselves and on those around them?

Our new direction of exploration

If this proposition is taken further, the strategic question of relevance to our investigations is more along the lines of:

Is an artificial, anthropomorphised character (or multiple characters) a good delivery mechanism for emotional support, and how might this influence long-term resilience?

Related to ‘EQLS’

Scenarios

Personal control

Devices quantify and measure all aspects of people’s lives and bodies, amplifying obsessive behaviours. People’s personal identity becomes based more on an idealised virtual version of themselves than on their existing reality.